Foreword

Artificial intelligence (AI) has emerged as a revolutionary tool penetrating all parts of society, including science, with a remarkable acceleration in the past two years. AI brings forward powerful opportunities but also challenges and risks, emphasising the importance of adapted policies.

At this crucial time, the EU AI Act promises to be the first-ever legal framework on AI, positioning the continent in a leading role to address the risks of the technology. However, at the time of publication of this report, it appears that many of the challenges of AI used in scientific research will not fall under the regulations of the AI Act.

AI applications are rapidly permeating scientific research in practically all fields, accelerating scientific discovery and innovation through colossal datasets and access to extremely powerful computing infrastructures. Parallel to the enormous opportunities of AI for science, discussions and debates are ongoing in universities, weighing opportunities against the potential risks of AI for the reliability, reproducibility and transparency of scientific production and our future knowledge base.

In July 2023, the College of Commissioners asked the Scientific Advice Mechanism to the European Commission to provide evidence-based advice on how to accelerate a responsible uptake of AI in science. To address this question, SAPEA assembled an independent, international, and interdisciplinary working group of leading experts in the field, nominated by and selected from European academies and their respective networks. Between October 2023 and January 2024, the working group reviewed and compiled the latest evidence on the subject to create this thirteenth SAPEA Evidence Review Report. This report informs the accompanying Scientific Opinion of the Group of Chief Scientific Advisors, which contains the requested policy recommendations.

This project was coordinated by Euro-CASE acting as the lead network on behalf of SAPEA. We warmly thank all working group members for their voluntary contributions and dedication, and especially the co-chairs of the SAPEA working group, Professors Anna Fabijańska and Andrea Emilio Rizzoli. We would also like to express our sincere gratitude to all experts involved in the process of evidence-gathering and peer review, and everyone else involved in pulling this report together.

Finally, we would also like to express our sincere gratitude to the academies across Europe, for their contribution in bringing together the outstanding experts who formed the working group.

Tuula Teeri, Chair of the Euro-CASE Board

Patrick Maestro, Secretary General of Euro-CASE

Stefan Constantinescu, President of the SAPEA Board

Preface

The rapid advancement of artificial intelligence is driving transformative impact across numerous scientific fields. It has also opened new frontiers for research across various disciplines. From facilitating extensive experimental data analysis to generating novel scientific hypotheses from literature, AI has the potential to revolutionise scientific discovery, accelerate research progress and boost innovation.

As artificial intelligence continues its remarkable evolution, a deeper understanding of its potential impact on science is crucial for researchers and policymakers to ensure its responsible adoption and use.

This evidence review report contributes to ongoing debate on how artificial intelligence can be harnessed for scientific advancement while addressing potential challenges and risks associated with its adoption. It examines the issue of responsible and timely AI uptake in science in Europe. Specifically, it analyses the current landscape, examines existing challenges and opportunities associated with AI adoption in science, analyses the impact of AI on researchers’ work environments and skills, and proposes policy options to address challenges identified.

This report draws upon a comprehensive evidence base established through an extensive literature review and further enriched by three expert workshops held between late 2023 and early 2024.

We extend our gratitude to all working group members, SAPEA’s report writing team and contributors for their dedication and hard work in completing this report within a concise timeframe. Additionally, we thank the experts who participated in the workshops, providing valuable insights and expertise that greatly enriched the analysis presented in this report.

Anna Fabijańska, co-chair of the working group

Andrea Emilio Rizzoli, co-chair of the working group

Members of the working group

- Anna Fabijańska, Lodz University of Technology, Poland (co-chair)

- Andrea Emilio Rizzoli, Istituto Dalle Molle di studi sull’Intelligenza Artificiale (USI-SUPSI), Switzerland (co-chair from 19 September 2023)

- Paul Groth, University of Amsterdam, The Netherlands

- Patrícia Martinková, Czech Academy of Sciences, Czechia

- Arlindo Oliveira, Instituto Superior Técnico, Portugal

- Karen Yeung, Birmingham Law School, UK

- Virginia Dignum, Umeå University, Sweden (co-chair and working group member until 7 September 2023, involved in the selection committee)

The above experts were identified with the support of:

- The Academy of Engineering of Portugal

- The Academy of Sciences of Lisbon

- The Czech Academy of Sciences

- The Polish Academy of Sciences

- The Royal Netherlands Academy of Arts and Sciences

- The Royal Swedish Academy of Engineering Sciences

- The Swiss Academies of Arts and Sciences

- The Young Academy of the Polish Academy of Sciences

Executive summary

This SAPEA evidence review report gathers the relevant scientific evidence to analyse:

How can the European Commission accelerate a responsible uptake of AI in science (including providing access to high-quality AI, respecting European Values) in order to boost the EU’s innovation and prosperity, strengthen the EU’s position in science, and ultimately contribute to solving Europe’s societal challenges?

Specifically, the report approaches the topic through the lens of AI’s impact on:

- scientific process, including the underlying principles upon which the scientific endeavour is organised

- people, including the skills, competencies, and infrastructure needed by scientists of tomorrow

- policy design, in the context of ensuring a timely, responsible, and innovative uptake of AI in science in Europe

In the rapidly-evolving field of AI, there is no universally accepted definition, nor a clear taxonomy outlining its various branches. Establishing such a definition would facilitate international collaboration among different countries. Therefore, recently, OECD countries have agreed to define an AI system as:

a machine-based system that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments. Different AI systems vary in their levels of autonomy and adaptiveness after deployment.

As AI applications permeate across many sectors, including in research, it is imperative that the EU takes hold of the opportunities, acts upon the challenges, and safeguards citizens from the risks that this fast-evolving technology can bring. As a companion effort to the EU’s regulatory AI Act in progress, which is working to promote the uptake of human-centric and trustworthy AI while ensuring a high level of protection of health, safety and fundamental rights (per AI Act Recital 1) in Europe, the European Commission aims to understand the specifics of AI technology not only developed by science, but as applied to science; that is, AI in science. This report reviews current evidence and potential policies that could support the responsible and timely uptake of AI in science in the EU that may enhance the EU’s innovation and prosperity. The scope of this report is confined to the takeup of AI in scientific research, rather than its implications for society more generally. In particular, it does not address the manifold and very significant challenges that arise from the growing and rapid deployment of AI technologies in specific social domains, and which fall outside the scope of this report and which the EU’s AI Act is intended to address.

Landscape of AI in research and innovation

Computational power

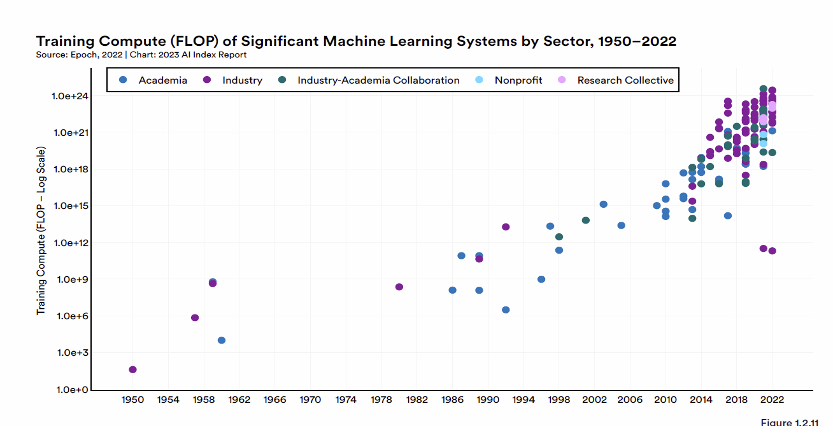

The computational power (‘compute’) required for advanced machine learning systems has increased exponentially for many decades and in particular since 2010, ultimately leading to a divide between academia and industry regarding access to specialised software, hardware, and skilled workforce. Academic institutions released the most significant machine learning systems until 2014, but industry has now taken the lead. Generative AI models (especially large language models (LLMs) and diffusion models), convolutional neural networks for vision applications and models trained using deep reinforcement learning, widen the gap between industry and research due to the huge computational resources needed to train them. Governments are investing in computing capacity, but lag behind private sector efforts. Newcomers, startups and AI research laboratories frequently build on big tech’s cloud services to train models and launch products.

Data

Besides compute, data is a crucial resource for AI development. However, the need to comply with copyright laws and research ethics requirements create challenges for public institutions in obtaining and processing data. While efforts are being made to provide fair and equal access while preserving privacy and ownership rights, issues remain unresolved.

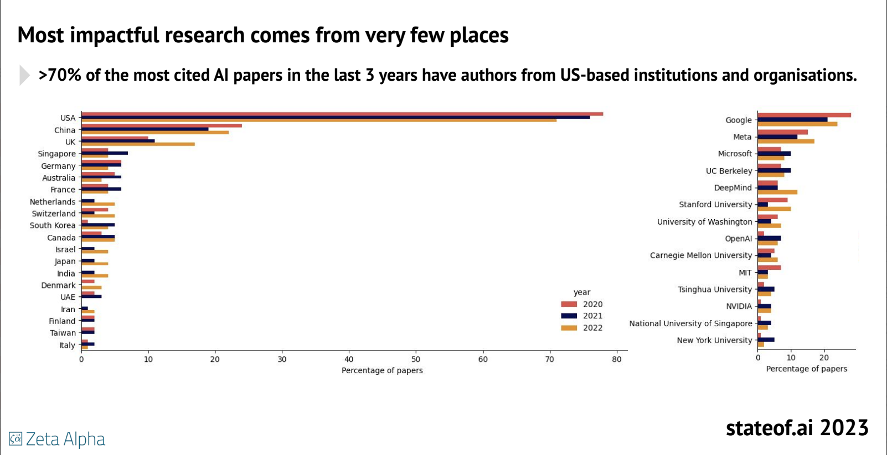

Geopolitics of AI

The USA, China and the UK (ranking third, but lagging far behind the first two) dominate AI research globally, but other European countries contribute significantly. China leads in scientific domains where AI plays a prominent role, while the USA excels in health-related fields and the EU in social sciences and humanities. In 2022, the USA had more authors contributing to significant machine learning systems than other countries like China and the UK. The top-cited AI papers are from companies like Google, Meta, and Microsoft. The growth rate of AI-related publications is much faster than overall scientific production globally.

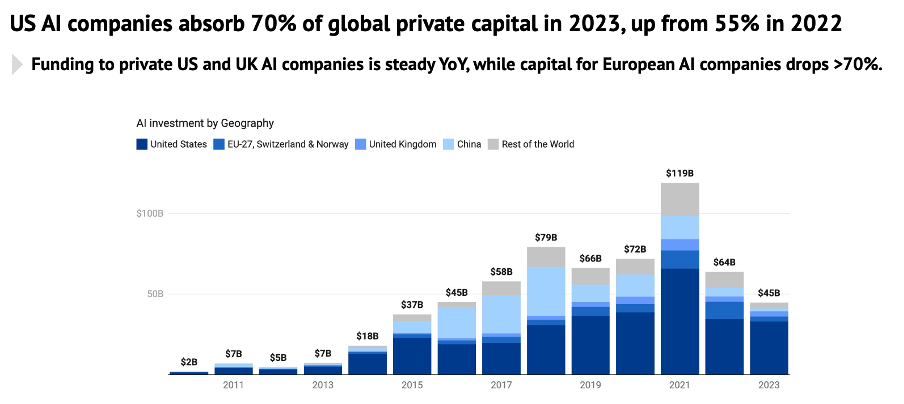

AI investment is surging globally, primarily driven by private sector investment, with the USA taking the lead. The EU has lost innovation leadership due to low research and development (R&D) investment and fewer startups. The problem is the commercialisation of R&D and scaling up. European efforts to boost AI development include programmes such as Horizon 2020, Horizon Europe, the Large AI Grand Challenge within the AI innovation package, and access to supercomputing resources through the EuroHPC JU network.

Regulatory landscape

Numerous AI-specific legal and regulatory measures are emerging worldwide, with many nations yet to enact comprehensive AI legislation. Most countries rely on existing frameworks for regulation, complemented by governance guidelines. As of October 2023, 31 countries had enacted AI laws, while an additional 13 countries are discussing potential regulations. The EU and China are leading in developing comprehensive AI regulations. China has been at the forefront of AI regulation, enacting specific measures for algorithmic bias, the responsible use of generative AI, and more robust oversight of deep synthesis technology. In the EU, considerable attention has been devoted to its AI Act, described by the European Commission as the most comprehensive AI legislation in the world. More recently, USA AI policy has seen the publication of several legally-mandated reforms.

Opportunities and benefits of AI in science

The increasing accessibility of generative AI and other machine learning tools for the analysis of large volumes of data has led scientists across various disciplines to incorporate them into their research. These tools facilitate the analysis of large amounts of text, code, images, and field-specific data, enabling scientists to generate new ideas, knowledge, and solutions. The number of scientific projects incorporating AI proliferates, with successful examples in protein engineering, medical diagnostics, and weather forecasting. Beyond facilitating groundbreaking discoveries, AI is also transforming the daily academic work of scientists, from supporting manuscript writing to code generation.

Accelerating discovery and innovation

AI’s transformative potential extends to accelerating scientific discovery and innovation. The vast amount of research knowledge in natural language format is harnessed through literature-based discovery processes, using existing literature in scientific papers, books, articles, and databases to produce new knowledge. Researchers can now use LLMs to mine scientific publication archives to generate new hypotheses, develop research disciplines, and contextualise literature-based discovery. They can also use advanced search methods, such as those based on deep reinforcement learning, to comb vast search spaces, opening the way to AI-driven discoveries.

Scientific domains relying on large amounts of data seem to have taken up AI to a larger extent in their research processes. The generation of Big Data in these research fields presents a challenge that AI is well-equipped to address. AI algorithms analyse massive, complex, and high-dimensional datasets, enabling researchers to identify patterns and develop new insights. In fields like astronomy, particle physics, and quantum physics, where even a single experiment generates vast amounts of data, AI algorithms identify patterns at scale with increased speed, allowing scientists to discover never-before-seen patterns and irregularities. AI is becoming an indispensable tool for extracting knowledge from experimental data.

AI and machine learning tools can also help bridge the gap between diverse research fields, promoting cross-disciplinary collaborations. By incorporating Big Data analytics using AI, humanities researchers incorporate quantitative measures, diversifying their research and research questions. For example, some historians use machine learning tools to examine historical documents by analysing early prints, handwritten documents, ancient languages, and dialects. Furthermore, AI exhibits potential in advanced experimental control of large-scale complex experiments. For example, physicists are now incorporating AI systems that use reinforcement learning to gain better control over their experiments.

Automating workflows

Traditionally, researchers performed experiments manually, often involving labour-intensive tasks. However, technological advancements enable the automation of a significant portion of experimental workflows. AI is revolutionising experimental simulation and automation, opening up new possibilities for research.

Enhancing output dissemination

AI is also enhancing the dissemination of research outputs. AI-powered language editing empowers non-native English speakers to refine their scientific manuscripts, bridging communication gaps between experts and the public. AI can also simplify the publishing process for newcomers, potentially fostering more inclusive scientific discourse.

Challenges and risks of AI in science

In taking up AI, scientific researchers need to address bias, respect principles of research ethics and integrity, and deal responsibly with issues surrounding reproducibility, transparency, and interpretability.

Limited reproducibility, interpretability, and transparency

The use of AI in science compounds existing concerns about reproducibility, while the opacity of AI algorithms also poses significant challenges to scientific integrity, interpretability and public trust. AI algorithms can generate useful outputs, but their opaque nature makes it difficult to verify the accuracy and validity of research findings. The lack of transparency in AI algorithms hinders reproducibility, as researchers cannot replicate important discoveries without knowledge or understanding the underlying methodological processes. The opacity of AI algorithms raises concerns about accountability and trust, particularly in high-stakes applications such as healthcare.

The increasing prevalence of generative AI models and computer vision systems produced by industry raises concerns about their opacity and the lack of control over human evaluation by academic researchers. Insufficient access to scalable pipelines, large-scale human feedback, or data hinders academic researchers’ ability to assess the safety, ethics, and social biases of machine learning models. The challenge of building state-of-the-art AI models due to the scarcity of computational and engineering resources leads to a reliance on commercial models, limiting reproducibility and advancement outside of commercial environments. The monopolisation of AI capabilities by tech giants raises concerns about their control over the development and application of AI, potentially limiting scientific progress and ethical considerations.

Poor performance (inaccuracy)

Despite their remarkable capabilities, AI models are susceptible to performance issues arising from various factors. One such factor is the quality of training data. The model’s predictions will inevitably suffer if the data used to train an AI model is biased, inaccurate, or incomplete. Additionally, AI models require ongoing updates to maintain their accuracy. Failure to retrain models with current data can lead to outdated algorithms generating inaccurate outcomes.

Another crucial aspect is the representativeness of training data. AI models often learn from data that does not accurately reflect real-world populations. This discrepancy can introduce biases into the model, resulting in erroneous predictions. Finally, the lack of adequate knowledge and training among researchers and developers contributes to performance shortcomings. AI models may be developed and deployed irresponsibly without proper expertise, leading to ethical and legal complications and concerns.

Fundamental rights protection and ethical concerns also arise. AI has the potential to perpetuate existing social biases and discrimination because AI systems trained on historically biased data and thus likely to reproduce these biases in their outputs. This can have a negative impact on people from marginalised groups, who may be unfairly discriminated against. AI systems can also introduce new forms of bias, such as visual perception bias. Machine vision systems may be biased because they are trained on datasets not representative of the real world.

In AI research, industry is now racing ahead of academia. Industry research has greater access to resources, such as data, talent, computing power, infrastructure, and funding, enabling them to take the lead over academia in developing sophisticated AI systems. This can disadvantage smaller institutions and academic researchers, making it more difficult for them to advance research.

AI systems can also raise privacy and data protection concerns since they often collect and process personal data and other, confidential information. There are several other challenges to advancing AI in science e.g. its adverse environmental impacts.

Misuse (malicious actors) and unintended harm

The misuse of AI in scholarly communication can lead to several significant social harms, including the proliferation of misinformation, the creation of low-quality outputs, and plagiarism. It constitutes research misconduct.

AI-generated content can be challenging to distinguish from human-generated content, increasing the risk of spreading misinformation. Predatory journals and paper mills can use AI to create fraudulent research papers. AI can make it easier to plagiarise content, potentially violating copyright and other intellectual property rights. The ease of producing AI-generated content may lead to an increase in the number of irrelevant papers. This can erode trust in scientific findings. AI-based tools can falsify information, which could lead to research misconduct. Using AI to generate content may lower the bar on the required scientific quality of the original work.

Using AI-based tools can automate specific tasks in the peer review process but, unlike human reviewers, cannot properly assess the novelty and validity of research findings reviewers can. As of today, AI still performs poorly in attempting to assess research quality, lacking human reviewers’ deep knowledge, capability of grasping meaning, significance and human understanding. Using AI-based systems to evaluate scientific research may introduce bias and additional errors into the research assessment process.

Societal concerns

The advancement of AI has raised concerns about its potential impact on society. One concern is the unfair appropriation of scientific knowledge, as large tech companies increasingly leverage scientific talent from universities and volunteer developers’ contributions from public code hosting and community platforms. Simultaneously, these companies hold patents and profits for themselves, while controlling access to computing and datasets. Additionally, using copyrighted material as training data for AI models raises concerns about copyright infringement, yet those whose IP rights may have been interfered with lack the capacity or resources to challenge purported infringement and seek redress, while identifying how IP law should apply in these contexts remains unsettled and uncertain.

Another concern is AI’s potential to manipulate and spread misinformation at scale. Additionally, it may pose cybersecurity threats, including malware generation through unsafe code with bugs and vulnerabilities, advanced phishing attacks using LLMs for large-scale deployment, cybercriminals leveraging AI tools for malicious activities or deepfakes and voice cloning leading to impersonation, fraudulent digital content generation and realistic voice scams. Furthermore, AI may impact modern warfare and facilitate bioweapons development.

Impact on scientists and researchers

Research environments, literacy and training

AI can change the research context and environment, automating tasks, enhancing productivity, and liberating researchers from menial tasks. It can also amplify a researcher’s expertise by personalising research tools and tailoring support and assistance to individual needs, preferences, and expertise. This transformation demands adaptation and the acquisition of new skills. To benefit fully from AI, universities and researchers must invest in AI literacy and digital skills, foster a collaborative culture between humans and AI in the framework of human-centred AI, and embrace the dynamic interplay between human expertise and AI augmentation.

AI literacy involves understanding the concepts, abilities, and limitations of AI technology and being able to effectively communicate with it while evaluating its trustworthiness. Ethical awareness, critical thinking, value addition to AI output, and fact-checking are other crucial skills for successful AI integration in research. Adapting to the rapidly changing research environment is crucial for remaining competitive in the field. Several AI teaching programmes exist in Europe to address these needs. These aim to educate individuals in various aspects of AI, from technical knowledge to ethical considerations to help develop a skilled workforce capable of addressing the growing demand for AI expertise across industries and sectors, including research.

Inequalities and biases

AI’s potential to transform research demands a conscious effort to address the geographical disparities in AI access and development and gender imbalances. Researchers should embrace a human-centred approach, mitigate biases, and collaborate with stakeholders to ensure AI’s positive and equitable impact on society.

Impact on researchers

Adopting AI in research careers may lead to negative consequences, undermining mental well-being, increasing job insecurity, pressure, and unfair discrimination. Additionally, using AI for review and selection processes can erode a sense of belonging among researchers. It is important to address these challenges to ensure the appropriate implementation of AI in research.

Evidence-based policy options

Based on these findings, this report identifies five broad challenges that confront EU policymakers that may help to accelerate the responsible and timely uptake of AI in scientific and research communities, thereby supporting European innovation and prosperity. In this context, ‘responsible’ is taken to mean that accelerated uptake of AI should strive to be in accordance with the foundational commitments of scientific research and the foundational values underpinning the EU as a democratic political community and thus ruled by law, ensuring respect for the fundamental rights of individuals and the principles of sustainable development.

The primary challenge that must be addressed in order to accelerate the uptake of scientific research both in AI, and using AI for research, concerns resource inequality between public and private sector research in AI. To foster scientific uptake of AI responsibly, four further challenges must be addressed, concerning:

- scientific validity and epistemic integrity

- opacity

- bias, respect for legal and fundamental rights and other ethical concerns

- threats to safety, security, sustainability, and democracy

This report then sets out a suite of policy options which are directed towards addressing one or more of these challenges. These policy proposals include:

- founding a publicly funded EU state-of-the art facility for academic research in AI, while making these facilities available to scientists seeking to use AI for scientific research, thereby helping to accelerate scientific research and innovation within academia

- fostering research and the development of best practices, benchmarks, and guidelines for the use of AI in scientific research aimed at ensuring epistemic integrity, validity and open publication in accordance with law and conducted in an ethically appropriate manner

- developing education, training, and skills development for researchers, supplemented by the creation of attractive career options for early career AI researchers to facilitate retention and recruitment of talented AI researchers within public research institutions

- developing publicly-funded, transparent guidelines and metrics, using them as the basis for independent evaluation and ranking of scientific journals by reference to their adherence to principles of scientific rigour and integrity. The publication of these evaluations and rankings would be intended to provide a more thorough, rigorous, informed, and transparent indication of the relative ranking of scientific journals in terms of their scientific rigour and integrity than existing market-based metrics devised by industry, helping to identify predatory and fraudulent journals

- establishing an EU ‘AI for social protection’ institute, which engages in information exchange and collaborates with other similar public institutes concerned with monitoring and addressing societal and systemic threats posed by AI in Europe and globally, proactively monitoring and providing periodic reports and making recommendations aimed at addressing threats to safety, security, sustainability, and democracy

Chapter 1. Introduction

We are experiencing the impacts of the disruptive technology of AI permeating across many sectors. As a ‘general-purpose technology’, it is imperative that the EU takes hold of the opportunities, acts upon the challenges, and safeguards people from risks that this fast-evolving technology can generate. As a companion effort to the EU’s regulatory AI Act in progress, which is working to to promote the uptake of human-centric and trustworthy AI while ensuring a high level of protection of health, safety, and fundamental rights (per AI Act Recital 1), the European Commission seeks to understand the peculiarities of AI technology not only developed by science, but as applied to and within science. Specifically, the EU seeks to understand how best to design research and innovation policies that will strengthen its research ecosystem and its competitive profile in a global context. As part of this effort, in July 2023, Margrethe Vestager, Executive Vice-President of the European Commission and acting Commissioner for Innovation, Research, Culture, Education, and Youth, asked the Group of Chief Scientific Advisors to deliver advice on the topic of the successful and timely uptake of AI in science in the EU.

This SAPEA evidence review report gathers the relevant scientific evidence to inform the Advisors’ Scientific Opinion. It addresses issues described in a scoping paper which sets out the formal request for advice from the College of European Commissioners to the Advisors. The aim of this report is to analyse:

How can the European Commission accelerate a responsible uptake of Al in science (including providing access to high-quality Al, respecting European values) in order to boost the EU’s innovation and prosperity, strengthen the EU’s position in science and ultimately contribute to solving Europe’s societal challenges?

Specifically, the report focuses on the key areas provided in the scoping paper to approach the topic through the lens of AI’s impact on:

- the scientific process, including the underlying principles upon which the scientific endeavour is organised and governed

- the people, including the skills, competencies, and infrastructure needed by scientists of tomorrow

- the policy design, with the aim of ensuring timely and responsible uptake of AI in science in Europe

What is scientific research?

Research is the quest for knowledge obtained through systematic study and thinking, observation and experimentation. While different disciplines may use different approaches, they each share the motivation to increase our understanding of ourselves and the world in which we live. […] Research involves collaboration, often transcending social, political, and cultural boundaries, underpinned by the freedom to define research questions and develop theories, gather empirical evidence, and employ appropriate methods (ALLEA, 2023)1

The governance of scientific research is largely undertaken by academics, predominantly through peer-based norms and mechanisms rooted in their widely-shared cultural, political, and professional commitments to science as the quest for knowledge and understanding. Four core norms of scientific research were first introduced by American sociologist Robert Merton, which he called the “ethos of science” rooted in its ultimate institutional goal of extending “certified knowledge” (Merton & Sztomka, 1996). These norms, which Merton described as “institutional imperatives”, are:

- Universalism refers to the impersonal nature of science, in which scientific truth claims are evaluated in accordance with pre-established criteria concerned with evidence and methodology that is independent of the character, identity, or status of those making such claims and consonant with previously-confirmed knowledge. In other words, everyone’s scientific claims should be scrutinised and evaluated equally to establish their “epistemic soundness” (de Melo-Martín & Intemann, 2023), irrespective of the identity or status of the scientist.

- Communism (sometimes referred to as ‘communalism’) refers to the status of scientific knowledge as common property, in which scientific discoveries are collectively owned as the ‘common heritage’ of humanity, underpinning the obligation for scientists to communicate their findings publicly and openly. Common ownership is supported by the institutional goal of advancing the boundaries of knowledge (and by the incentive of recognition which is contingent on publication).

- Disinterestedness requires that scientists work only for the benefit of science, and reflected in their ultimate accountability to their scientific peers, and not for any organisational or other interest.

- Organised scepticism requires that the acceptance of all scientific work should be conditional on assessments of its scientific contribution, objectivity, and rigour (Merton & Sztomka, 1996).

This ideal model of scientific endeavour and its organisation is grounded in a deep commitment to epistemic integrity (de Melo-Martín & Intemann, 2023).2 To this end, the scientific community has developed a set of more specific norms or “principles of research” to “define the criteria of proper research behaviour, to maximise the quality and robustness of research, and to respond adequately to threats to, or violations of research integrity”, and have been enumerated in codes of conduct for research integrity, such as The European code of conduct for research integrity (ALLEA, 2023) and Best practices for ensuring scientific integrity and preventing research misconduct (OECD, 2007). These codes concern various matters including research misconduct, involving the “fabrication (making up results and recording them as if they were real), falsification (manipulating research materials or processes or changing, omitting or suppressing data or results without justification) or plagiarism (using other people’s work and ideas without giving proper credit to the original source”, thus violating the rights of the original author(s) to their intellectual outputs (ALLEA, 2023).

In this report, we proceed on the basis that the ‘responsible’ uptake of science in accordance with European values should be aligned with and serve the institutional goal of science in extending the bounds of certified knowledge that is openly and universally communicated, owned in common and epistemically sound.

Who conducts research?

Although the traditional image of research is that of a university-based academic research group, much contemporary research is carried out in industry and other settings. The organisation of research has changed over time and differs between Europe and the USA (Carlsson et al, 2009). In the 19th century, interdependence emerged between the needs of the growing US economy and the contemporary rise of university education (Rosenberg, 1985). In Europe, the role of the universities was more oriented towards independent and basic research, as manifested by the Humboldt University in 1809. Although basic science was weak in the US until the 1930s and 1940s, research universities emerged after World War II, largely designed as a modified version of the Humboldt system entailing competition and pluralism. The beginning of the 20th century saw the development of the corporate lab, which also conducted basic research (the first corporate lab was set up in Germany in the 1870s).

The close links between industry and science, characterised by collaborative research and two-way knowledge flows, were thus reinforced. At that time, in-house corporate research was much higher in the USA than in Europe, with the employment of scientists and engineers growing tenfold in the US between 1921 and 1940. During the 1940s, there was a huge increase in R&D spending driven by the war, while the following decades saw a decrease in R&D relative to gross domestic product. Basic research diminished, while firms also reduced their R&D spending. In the USA, the situation was reversed during the 1980s, propelled by a number of institutional reforms directed towards IP rights, pension capital, and taxes. Entrepreneurial opportunities were created through scientific and technical discoveries which were paralleled by governmental policies, and which inserted a new dynamism in the US economy. A shift then followed away from large incumbent firms to small, innovative, skilled-labour intensive, and entrepreneurial entities (Braunerhjelm, 2010; Carlsson et al, 2009).

A large range of private entities, varying from small organisations to large and powerful tech and social media giants, are now continuously engaged in research, including AI research. While the motives of commercial research may be profit-driven, it is generally considered important for innovation and economic growth (Quinn, 2021). Accordingly, this report proceeds on the basis that research encompasses the work of a variety of different research communities. In the remainder of this document, we will consider the impact of AI on science, encompassing both the science of AI and the use of AI for scientific research more generally.

What is AI?

In order to proceed, it is necessary first to define what AI is. Unfortunately, there is no commonly-agreed definition of AI, nor a clear taxonomy describing its various branches. AI is a fast-moving field and its recent growth has challenged many of the definitions that have tried to frame it. Yet governments need a common definition to regulate it effectively, making it easier for different countries to work together. With this in mind, recently (November 2023), OECD countries agreed on the following definition:

An AI system is a machine-based system that for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments. Different AI systems vary in their levels of autonomy and adaptiveness after deployment.

The above definition has been used in the EU’s AI Act. It emphasises the reactive nature of AI algorithms, producing an output upon presentation of an input, while recent developments hint at the possibility of AI algorithms becoming active systems, acquiring ‘awareness’. AI developments could include acquiring what might be understood as ‘emotional intelligence’ and multiskilling/tasking.

Due to its inherent versatility and broad applicability, AI is considered a general-purpose technology. It has therefore moved from a purely technical field to an interdisciplinary research domain, with multifaceted implications in terms of its use and uptake. Thus, any policies surrounding AI uptake will inevitably impact various domains, including scientific research, although the extent of their impact is likely to differ across domains and contexts.

The trajectory of modern AI started in the early 2010s with the advent of the computational capabilities needed to run deep neural network architectures with millions of parameters, able to process large datasets and extract knowledge from diverse sources, including audio and video signals, displaying for the first time superhuman capabilities, for example in image classification tasks (Krizhevsky et al, 2017). Since then, the frontier has been pushed constantly further ahead, and with transformer architectures (Vaswani et al, 2017) introduced in 2017, natural language processing has made a giant leap. These huge ‘large languagd model’ (LLM) systems, composed of billions of parameters, can now emulate the ability of a human in producing written text, and some researchers are now debating whether we are on course for the achievement of artificial general intelligence (AGI). A framework for the evaluation of AGI has been proposed (Ringel Morris et al, 2023), that is a “form of AI that possesses the ability to understand, learn and apply knowledge across a wide range of tasks and domains. AGI can be applied to a much broader set of use cases and incorporates cognitive flexibility, adaptability, and general problem-solving skills”.

While AGI might still be out of immediate reach and LLMs might not be the way to achieve AGI, at least in their present form, the capabilities of LLMs continue to impress, especially when processing multimodal data (text, images, videos, sounds) and when they are integrated with other types of AI, such as generative AI models like stable diffusion, reinforcement learning, and more. The recent release of Sora, the OpenAI application that generates high quality videos from textual prompts is such an example. As a result, recent years have witnessed a significant rise in LLM research and development activities and growing academic, scientific and public interest in the field.

Given this trajectory of exponential increase in the capabilities of AI, substantial impacts are expected in practically every domain where data can be digitised and fed into an AI system. In this report, particular relevance is therefore attributed to the impact of generative AI, with a specific focus on LLMs, in the domain of scientific advances and innovation in AI research, since enhancing the productivity of knowledge discovery returns a manifold of applications in all other domains.

Report structure

In Chapter 2, we discuss the preconditions and the context for the application of AI to research, namely the availability of skilled researchers and the necessary infrastructure to develop state-of-the-art AI algorithms, including the access to trustworthy data for training AI models. We also analyse the geopolitical and economic context, conditions, the availability of access to human resources and computational infrastructures, and then we provide a brief examination of the current regulatory landscape, which is rapidly evolving.

Against this background, Chapter 3 reviews the evidence that demonstrates the potential benefits and novel opportunities for the future use of AI in the scientific discovery process, such as the automation of scientific workflows and the AI-enhanced exploration of scientific literature.

Chapter 4 then identifies potential challenges and risks, including potential misuses and abuses, besides the fundamentally unsolved issue of the accuracy and explainability of some AI research (and AI-enabled research), including the most novel AI architectures.

In Chapter 5, the focus shifts to the people behind the scientific discovery process and how researchers are affected by the mounting wave of AI applications in their respective research areas: in particular, how can we promote a collaboration between the human and the machine avoiding the pitfall of relinquishing the driving seat to the latter? For this purpose, the role of education as the key to foster a synergistic collaboration is analysed.

Finally, Chapter 6 identifies a suite of policy options aimed at addressing the challenges identified in this evidence review that currently hinder the successful and responsible uptake of AI in science.

At the end of each chapter, we gather the key findings from the evidence reviewed. These key findings are organised to highlight the level of uncertainty associated with the evidence gathering to support them.

Chapter 2. Landscape of AI research & innovation

Data and computational infrastructure

The growth in AI is enabled by increases in computing power (the hardware, also called compute), open-source software platforms, and the abundant availability of Big Data. In particular, the advent of generative AI has radically heightened the demand for training data and for computational infrastructure.

The development of generative AI

The introduction of transformer architecture has enabled the parallelisation of computational processes during AI training, enabling training on much larger datasets than previously possible, and thus the scaling up of AI language models.

However, training and running LLMs requires significant amounts of processing power. Not only does this require large amounts of capital to invest in or rent the necessary hardware, such as powerful graphics processing units (GPUs) or expensive purpose-built chips, but it also requires the professionals who possess the skills and the experience to operate complex neural networks on large clusters of hardware (Luitse & Denkena, 2021).

The release of Generative Pretrained Transformer-3 (GPT-3) by OpenAI in June 2020 sparked a significant surge in interest in LLMs, driven by its remarkable human-like language generation capabilities. Instead of releasing the model as open source, like its predecessors, OpenAI introduced an API through which accepted users can access it as a running system to generate textual output, introducing a pricing plan two months later following the conclusion of its ‘beta’ phase during which users could test the service free of charge. This marked a distinct move away from open-source release, in which OpenAI operates GPT-3 as a closed system and controls its accessibility. In this way, Mayer has described this as creating a model of “unique dependence” (Mayer, 2021) as it no longer allows developers to view, assess or build on top of GPT-3. However, despite the real power of models such as GPT-3, it took roughly three more years for the power of LLMs to widely reach public attention, something that happened only in late 2022 with the release of ChatGPT. 2023 was a breakout year for generative AI, with leading tech companies releasing their LLMs. This also includes a large number of open source LLMs, over 1000 of which were available on the HuggingFace platform.3

Apart from text generation capabilities, leading LLMs have gained multimodal capabilities across image, audio, video, tabular data, and text understanding (Chui, 2023). These capabilities include, among others (Gemini Team et al, 2023; OpenAI et al, 2023; You et al, 2023):

- understanding and reasoning across multiple data modalities (text, images, video, audio, and programming code)

- automatic speech translation

- video question answering

- reasoning about user intent

- solving visual puzzles

- source code generation for specific tasks

- reasoning in maths and physics

Parallel to generative language models, image generative models have been actively developed, with state-of-the-art models coming from tech companies and AI startups. This group of models performs text-to-image translation (e.g. DALL-E, Midjourney) or text-to-video translation (Girdhar et al, 2023; Ho et al, 2022), which is a process of synthesising a photo-realistic image or a short video corresponding to a textual description (so-called ‘prompt’). The use of generative models expands to audio as well. Solutions exist to generate sounds and music based on textual prompts and/or input melody (Copet et al, 2023; Kreuk et al, 2022).

Hardware and software

The majority of AI developers, both in academia and industry, use open-source software frameworks to develop AI systems: PyTorch, Tensorflow, Keras, and Caffe can be used within Python and R to effectively develop and deploy advanced AI systems. But this is the only vertex of the software-data-hardware simplex where academia and industry stand on equal footing. The increasing compute needs of AI systems create more demand for specialised AI software, hardware, and related infrastructure, along with the skilled workforce necessary to use them. As government investments are constrained, compute divides between the public and private sectors can emerge or deepen. The massive expansion of the digital economy in the last two decades has become the object of social scientific research, including the “political economy of AI” (Srnicek, 2016; Zuboff, 2019). These studies investigate the dynamics of competition and the consolidation of power in the digital era. Although scholars have adopted a variety of theoretical frameworks, they all draw attention to the concept of the “digital platform”, through which large tech firms position themselves as intermediaries in a network of different actors, allowing them to extract data, harness network effects and approach monopoly status (Luitse & Denkena, 2021).

The expansion of private sector monopoly power can worsen the disparity between public and private sector research, because the public sector increasingly lacks the resources to train cutting-edge AI models. Industry, rather than academia, is increasingly providing and using the compute capacity and specialised labour required for state-of-the-art machine learning research and training large AI models. This trend points to the need to increase access to facilities like high-performance computing and software to support the development of AI in public science.

For example, the Stanford AI Index Report 2023 draws attention to the increasing computational power needed for complex machine learning systems. Since 2010, language models have demanded the greatest use of computational resources. More compute-intensive models also tend to have more significant environmental impacts; training AI systems can be incredibly resource-intensive, although recent research has shown that AI systems can be used to optimise energy consumption (Maslej et al, 2023). Industry players tend to have greater access to computational resources than others, such as universities, as demonstrated in Figure 1, which highlights the amount of ‘compute’ by sector since the 1950s.

According to the Stateof.ai Report 2023, produced by Epoch AI:

Governments are building out compute capacity but are lagging private sector efforts. Currently, the EU and the USA public research bodies are superficially well-placed, but Leonardo and Perlmutter, their national high-performance computing (HPC) clusters, are not solely dedicated to AI and resources are shared with other areas of research. Meanwhile, the UK currently has fewer than 1000 NVIDIA A100 GPUs in public clouds available to researchers.

The same report adds that companies such as Anthropic, Inflection, Cohere, and Imbue are shoring up NVIDIA GPUs and wielding them as a competitive edge to attract customers (Stateof.ai Report 2023, slides 131 and 72). This investment in compute is made possible by the enormous market capitalisation of AI startups, especially in the USA, as shown in Figure 2.

Newcomers, startups, and even AI research laboratories rely on the computing infrastructure of Microsoft (Azure), Amazon (AWS), and Google (Google Cloud) cloud services to train their systems and use these companies’ extensive consumer market reach to deploy and market their AI products (Kak et al, 2023; Rikap, 2023c, 2023d), thus reinforcing the model of unique dependence.

Data

Besides compute (hardware) and software, the most important resource for the successful development of AI systems is data.

Unlike software, much of which is open source, at least for what concerns the development frameworks for AI systems, a considerable body of valuable data is protected by copyright law. In addition, research undertaken at public research institutions is bound to comply with principles of research ethics, creating additional hurdles that must be met in order for that data to be accessed and processed by public research institutions. In the USA, for example, the New York Times has sued OpenAI for the alleged use of copyrighted material for training their GPT series LLMs, and we can expect further litigation of this kind. Although European law differs from US law, providing mechanisms by which copyright owners can reserve their rights for their copyright-protected work to be excluded from data mining by others, these procedures are criticised as unwieldy, impracticable, and difficult to enforce. Accordingly, the need to provide fair and equal access to data, while preserving privacy and ownership rights, remains an ongoing and fraught challenge. Although the EU is already active in this policy space, the issues remain contested and unresolved.

However, it is worth noting that even opening access to all currently available data might not be enough to address the needs of the most data-intensive AI algorithms, according to some claims. In particular, the Stateof.ai Report 20234 claims that “we will have exhausted the stock of low-quality language data by 2030 to 2050, high-quality language data before 2026, and vision data by 2030 to 2060”. Notable innovations that might challenge this claim are speech recognition systems such as OpenAI’s Whisper that could make all audio data available for LLMs, as well as new optical character recognition models like Meta’s Nougat.

Geopolitical economy of AI

To understand the context in which AI technologies are being taken up in research communities in Europe, it is helpful to understand the larger geopolitical and economic landscape and dynamics in which AI research and innovation are proceeding. For this, we briefly analyse the main actors in the development of AI systems and in the research about them, and then we review how such R&D efforts are funded. On this basis, we review the geopolitical implications.

Who develops AI systems?

AI research is on the rise across the board (affiliated with education, government, industry, non-profit, and other sectors), with the total number of AI publications more than doubling since 2010 (Maslej et al, 2023, pp. 24–28). Most significant machine learning systems were released by academia until 2014, but industry has since overtaken academics with 32 significant industry-produced machine learning systems compared to just three produced by academia in 2022. This could be attributed to the resources needed to produce state-of-the-art AI systems, which ‘increasingly requires large amounts of data, computing power, and money: resources that industry actors possess in greater amounts compared to nonprofits and academia’ (Maslej et al, 2023, p. 50). The Stanford AI Index Report 2023 estimates validate popular claims that large language and multimodal AI models are increasingly costing millions of dollars to train. For example, Chinchilla, an LLM launched by DeepMind in May 2022, is estimated to have cost $2.1 million; BLOOM’s training is thought to have cost $2.3 million (Maslej et al, 2023, p. 62); and PaLM, one of the flagship LLMs launched in 2022 and around 360 times larger than GPT-2, is estimated to have cost 160 times more than GPT-2 at $8 million (Maslej et al, 2023, p. 23).

The highest number of notable machine learning systems originated from the US, totalling 16, followed by the UK with 8 and China with 3. Since 2002, the US has consistently surpassed the UK, EU, and China in terms of the overall quantity of significant machine learning systems produced (Maslej et al, 2023).

Who researches AI systems?

A bibliometric analysis of 815 papers published on AI and innovation in the areas of social science, business management, finance and accounting, decision science, and economics and econometrics as well as multidisciplinary areas revealed that 418 contributing authors were based in the USA, followed by China (229), the UK (125), and India (106) (Khurana, 2022). Cumulatively, authors from the EU and associated countries made a significant contribution, with 65 authors based in Italy and Spain each, 50 in Germany, 41 in Sweden, 33 in the Netherlands, 30 in France, 23 in Finland, 21 in Switzerland, and between one and 18 authors in other European countries.

Similar results were observed in another study on AI and innovation in business, management and accounting, decision science, economics, econometrics, and finance, where out of the 1448 identified records, 227 originated in the USA, 151 in China, 125 in Italy, 123 in Germany, and 119 in the UK (Mariani et al, 2023).

A bibliometric study of 5890 AI-related articles published in 2020 and 2021, during the COVID-19 pandemic, found that the top countries were China (2874 records), the USA (895 records), and the UK (430 records), closely followed by Australia (426 records). France and Germany were the countries of origin of 182 and 181 papers respectively (Soliman et al, 2023).

Similarly, authors based in the USA, the UK, and Canada produced the most research on AI in healthcare, followed by authors from Germany, Italy, France, and the Netherlands as well as China and India (Bitkina et al, 2023; Zahlan et al, 2023), while the USA, China, the UK, Canada, India, and Iran dominate research on AI in engineering, with a significant number of papers being produced in Germany and Spain (Su et al, 2022; Tapeh & Naser, 2023).

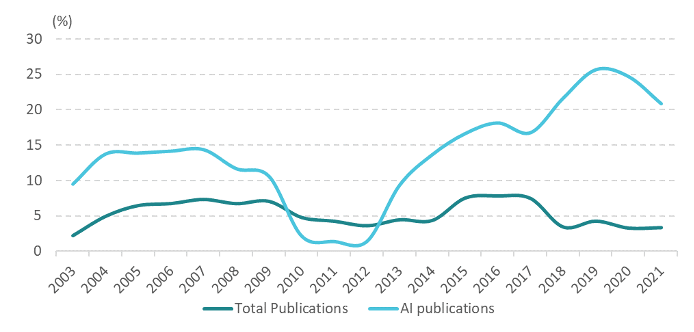

An important source of data is a bibliometric analysis undertaken by the European Commission’s Directorate-General for Research and Innovation (European Commission, Arranz, et al, 2023). According to this analysis, the field of AI is growing at a faster rate than that of scientific production as a whole: global scientific activity has grown at around 5% per year between 2004 and 2021, while the annual growth rate of AI-related publications has been around or above 15%, except for 2010–2012 (Figure 3).

In 2022, the USA led in the number of authors contributing to significant machine learning systems, boasting 285 authors. This figure is more than double the count in the UK and nearly six times that in China. In the last decade, the USA has outpaced both the EU and the UK, as well as China, in terms of private AI investment and the number of newly-funded AI companies. Since 2015, China has outpaced the EU in private AI investment (Maslej et al, 2023).

The Stateof.ai Report 2023 shows that over 70% of the most cited AI papers in the past 3 years have authors from USA-based institutions and organisations. The top 3 organisations with the most cited AI papers are also industry leaders, namely Google, Meta, and Microsoft, ahead of USA-based universities (Figure 4).

Funding AI research and development

There is no systematic way of tracking AI funding across countries and agencies. However, a study by the European Commission Joint Research Centre’s TechWatch estimated that 68% of AI investment in 2020 came from the private sector, with 32% from the public sector. Private sector investment was also growing at a faster rate (Dalla Benetta et al, 2021).

Europe is struggling to see the same level of private investments in AI as the US, and therefore is exploring a number of measures to kindle the development of AI companies and new AI products and innovations. European funding programmes such as Horizon 2020 and Horizon Europe support a large number of AI research projects. Since 2014, projects focusing on AI or using AI tools are estimated to have received €1.7 billion in EU funding. AI projects funded under the Horizon Europe programme are represented by academia and industry from multiple countries, aiming to boost collaboration in R&D across the EU.

The European Commission is also tackling the funding issue with a strategic approach outlined in the AI innovation package, which addresses the expected needs of startups and small and medium-sized enterprises. The first action of this programme has been to launch the Large AI Grand Challenge, a prize giving AI startups financial support and supercomputing access.

Finally, the EU is also funding access to supercomputing resources through the EuroHPC JU network, comprising eight supercomputers, two supercomputers still to be deployed, and six quantum computers.

AI as a geopolitical asset

AI has become a geopolitical asset. Building-blocks of AI technology act as geopolitical bottlenecks, impeding the development of the technology and its deployments in regions that do not have access. In particular, talents and computing power are strong limiting factors for the development of AI (Lazard, 2023).

Large AI models are attracting growing public investment. However, because of the growing power of these AI models, and because they lack transparency, it has become more and more difficult to balance out the power concentration (Miailhe, 2018). These limitations impact researchers on AI in the public sector who have limited or conditional access to the technology, and the uptake of AI in science, due to the unfair appropriation of scientific knowledge (see Chapter 4).

The Global AI Index ranks the performance of countries in AI, analysing absolute and relative measures including indicators on the implementation of AI systems, the level of innovation, and the amount of investments. The index delivers profiles for individual countries and an overall ranking arranged according to the final index scoring. The USA and China top the rankings, with EU countries following behind – but from the EU, only Germany, Finland, the Netherlands, France, Denmark, Sweden, Luxembourg, and Austria make the top 20. The UK is ranked fourth and Switzerland ninth.

A European Parliament Research Service report (EPRS, 2022) suggests that the EU has lost its innovation leadership due to low R&D investment and a small number of startups. The report attributes these issues to the commercialisation of R&D and scaling-up challenges. It offers an analysis of challenges that, if appropriately addressed, may help accelerate the responsible uptake of AI in science in accordance with European values, that might strengthen European science. To that end, it identifies policy options that are aimed at strengthening both scientific research in AI and the use of AI by European scientists more generally. These policy proposals are broadly concerned with:

- appropriate training, education, and development programmes

- the development of guiding principles and standards for the use of AI in science in accordance with the basic precepts of scientific rigour, integrity, and openness

- investment, infrastructure and institutional changes that may be necessary to establish the broader socio-technical, political and economic conditions for scientific research in and with AI to flourish in Europe.

As well as looking at state aid exemptions and corporate tax incentives for R&D expenditures, the report suggests, for example, promoting digital innovation hubs that act as ‘one-stop shops’ to provide services, from access to critical infrastructure and testing facilities to incubation and acceleration. These measures could promote the translation of research to commercial opportunities and the commercialisation of R&D by industry (EPRS, 2022).

The EU faces the challenge of researchers migrating to other regions, notably the US, leading to a brain drain. According to Khan (2021), five factors contribute to this outflow of human capital:

- attractive salaries outside Europe

- short-term fixed contracts for early career researchers

- unfair recruitment procedures

- appealing migration policies

- internationalisation policies that encourage permanent mobility

Though contributing to innovation across different fields, immigration’s impact on STEM patent generation in the US appears particularly strong compared to arts and social sciences (Bernstein et al, 2022).

Regulatory landscape

AI-specific legal and regulatory measures are proliferating, reflected in the creation of laws and policy frameworks across many countries that are specifically directed at regulating AI. According to the Stanford AI Index Report 2023, which examined the legislative records from 127 countries, only one bill directly concerned with the regulation of AI passed into law in 2016, but by 2022, the number rose to 37 (Maslej et al, 2023).

The year 2016 marked a critical turning-point in public debate about AI, commonly referred to as the ‘techlash’ (“a strong and widespread negative reaction to the growing power and influence that large technology companies hold”, a term first introduced into the Oxford English Dictionary in 2018). In particular, public revelations concerning the Russian use of social media platforms to interfere with the 2016 USA elections, Cambridge Analytica’s misuse of Facebook data for political micro-targeting, and the opening of investigations against Google for alleged antitrust violations, highlighted how AI applications can damage vital individual rights and collective interests, threatening the integrity of democratic procedures. Until 2016, policymakers worldwide had largely accepted that self-regulation could be relied upon to address adverse impacts arising from AI applications, reflected in the multitude of ‘ethical codes’ promulgated by members of the tech industry, either individually or as various consortia (Rességuier & Rodrigues, 2020; Yeung et al, 2019).

Legislative proposals specifically concerned with the regulation of AI have subsequently emerged throughout the world, but the content and scope of these measures display considerable variation. Although the EU and China are taking the lead in developing comprehensive AI regulations, more recent US AI policy has seen the publication of a number of legally-mandated reforms. For example, on 30 October 2023, President Biden issued a sweeping executive order on AI with the goal of promoting the “safe, secure, and trustworthy development and use of AI”, which applies to executive branch authorities only, and relies extensively on the US National Institute of Standards and Technology to develop guidelines and best practices. Several weeks later, the AI Research, Innovation, and Accountability Act of 2023 was introduced, supported by key members of the Senate Commerce Committee from both parties. It seeks to:

- introduce legislative initiatives to encourage innovation, including amendments to open data policies, research into standards for detection of emergent behaviour in AI, and research into methods of authenticating online content

- establish accountability frameworks, including key definitions, reporting obligations, risk-management assessment protocols, certification procedures, enforcement measures, and a push for wider consumer education on AI

China has also introduced AI laws, comprised of a series of more targeted AI regulations, enacting specific measures for algorithmic bias, the responsible use of generative AI, and more robust oversight of deep synthesis technology (synthetically generated content) arising by the Algorithmic Recommendation Management Provisions (2021), Interim Measures for the Management of Generative AI Services (2023), and the draft Deep Synthesis Management Provisions (2022). These measures are regarded as laying the intellectual and bureaucratic groundwork for a comprehensive national AI law that China is expected to release in the coming years. These regulations aim to prevent manipulation, protect users, and ensure AI’s responsible development and use while enabling Chinese regulators to develop their bureaucratic know-how and regulatory capacity.

In the EU, considerable attention has been devoted to its AI Act, described by the European Commission as the most comprehensive AI legislation in the world. The text of the Act that will form the basis of the vote in the Permanent Representatives Committee on 2 February 2024 was leaked on 22 January 2024 and was officially released to Member State delegations on 24 January 2024. Its stated purpose is to establish a uniform legal framework for the development and deployment of AI systems in the EU in conformity with European values to “promote the uptake of human-centric and trustworthy AI while ensuring a high level of protection for health, safety, fundamental rights enshrined in the Charter including democracy, the rule of law and environmental protection against the harmful effects of AI systems in the Union and to support innovation” (AI Act, Recital 1, paragraph 11). It adopts a so-called “risk-based” approach, such that the higher the risks associated with the AI system in question, the proportionately more demanding legal requirements. To this end, it classifies “AI systems” into four classes:

- prohibited practices, considered to pose an unacceptable risk to the EU’s values and principles, such as those that manipulate human behaviour or exploit vulnerabilities, and are therefore banned

- “high risk” AI systems that are used in critical sectors or contexts, such as health care, education, law enforcement, justice, or public administration

- “general-purpose AI models”, defined as AI models, including those trained with a large amount of data using self-supervision at scale, that “display significant generality and are capable to competently perform a wide range of distinct tasks”. These systems, and the models upon which they are based, must adhere to transparency requirements, including drawing up technical documentation, complying with EU copyright law and disseminating detailed summaries about the content used for training. If these models meet certain criteria, their developers will have to conduct model evaluations, assess and mitigate systemic risks, conduct adversarial testing, report to the Commission on serious incidents, ensure cybersecurity, and report on their energy efficiency

- “limited or minimal risk” AI systems, which are subject to transparency obligations, such as informing a person of their interaction with an AI system and flagging artificially generated or manipulated content

AI systems that do not fit within these categories fall outside the scope of the Act.

The Act also seeks to establish new institutional and administrative reforms, including:

- An AI Office within the Commission. It will oversee the most advanced AI models, help develop new standards and testing practices, and oversee the enforcement of common rules in all EU member states. Some commentators anticipate that its role will become equivalent to the AI Safety Institutes that have recently been announced in the UK and the US.

- A scientific panel of independent experts to advise the AI Office about general-purpose AI models, and to contribute to the development of methodologies for evaluating the capabilities of foundation models and monitor possible material safety risks related to foundation models, when high-impact models emerge.

- An AI Board, which comprises EU member states representatives, to remain as a coordination platform and an advisory body to the Commission while contributing to the implementation of the AI Act (e.g. designing codes of practice).

- An advisory forum for stakeholders, to provide technical expertise to the AI Board.

Despite these institutional innovations, the entire foundation of the regime is based on the EU’s existing ‘New Legislative Framework’ approach to product safety. Although developers and deployers of these systems must ensure that their systems comply with the ‘essential requirements’ specified in the Act (for ‘high risk’ systems, these concern data quality, transparency, human oversight, accuracy, robustness, security, and the maintenance of suitable ‘risk management’ and ‘quality management’ systems), the Act provides that European standardisation bodies (notably CEN/CENELEC) may establish “harmonised standards” for AI. This standard-setting work is currently underway. If formally adopted by the European Commission (through notification in the Official Journal), firms that voluntarily comply with these standards will benefit from a presumption of conformity with the Act’s essential requirements.

Yet these technical standards are not, and will not be, publicly available on an open-access basis: because CEN/CENELEC are non-governmental voluntary organisations through which technical standard-setting is undertaken by volunteer experts, the standards are protected by copyright. Nor is the technical standard-setting process subject to the conventional legal procedures of democratic consultation and oversight. Thus, although civil society bodies have broadly welcomed the EU’s initiative, they have expressed significant criticisms of what they regard as deficiencies in the opportunities it provides for democratic participation while failing to provide meaningful and effective protection for the protection of fundamental rights, consumer safety, and the rule of law (Ada Lovelace Institute, 2023a; ANEC, 2021; BEUC, 2022; Micklitz, 2023).

However, it is important to situate the EU’s AI Act within its broader digital strategy. The EU has been at the forefront of establishing a legal framework to protect individuals’ personal data, with the General Data Protection Regulation as its centrepiece. Its ‘digital strategy’ is intended to supplement the EU’s data and digital framework with a new set of rules aimed at fostering data flow, data access, and the data economy introduced by the Data Act and the Data Governance Act, which will apply to both personal and non-personal data, including machine and product data. It also introduces enhanced legal obligations and user protections for online platform services, online hosting services, search engines, online marketplaces, and social networking services under the Digital Markets Act and the Digital Services Act. Accordingly, the AI Act must be understood within this broader digital policy landscape, particularly given the role of data as a critical input for AI development and technologies. A discussion of these elements is beyond the scope of this report.

We have already noted active contestation and uncertainty about the scope, role, and limits of copyright law, given that many of the latest ‘foundation models’ used for generative AI applications rely on ingesting massive volumes of data scraped from the internet, including works that are ostensibly subject to copyright protection. However, IP law is a highly specialised field of legal protection. Accordingly, identifying and applying the content and contours of copyright law has become increasingly fraught as the size, significance, and sophistication of digital technologies and the digital economy has grown in recent years, particularly since IP laws are typically jurisdiction-specific. It is widely recognised that IP laws serve an important purpose that enables innovation to flourish, by conferring property rights on the creators of original works which are legally enforceable. Yet IP legal theorists also recognise that the goal of IP law should be to strike an appropriate balance between the interests of authors of original content in the temporary monopoly which IP rights create (thus recognising their interests and investments in their own creation, the personality of the authors, etc.) and the protection of certain other interests, such as public access to knowledge and information. In this way, copyright can foster creativity, innovation, and socioeconomic welfare. Thus, various European laws contain specific carve-outs to allow for scientific and other kinds of research. For example, the General Data Protection Regulation provides special provisions for the processing of personal data for “archiving purposes in the public interest, scientific or historical research purposes or statistical purposes” (GDPR article 89), while the EU’s Digital Single Market Directive allows two text/data mining exceptions. Article 3 introduces a mandatory exception under EU copyright law which exempts acts of reproduction (for copyright subject matter) and extraction (for the sui generis database right) made by “research organisations and cultural heritage institutions” in order to carry out text and data mining for the purposes of scientific research, while Article 4 mirrors Article 3 with one major difference: it is available to any type of beneficiaries for any type of use, but these can be overridden by express reservation via right holder ‘opt-out’ (see, for example, Margoni & Kretschmer, 2022).

Most countries do not have comprehensive AI legislation, laws, or policies tailored explicitly to AI. Instead, AI operates within existing legal and regulatory frameworks, complemented by governance frameworks, supporting acts, and guidelines (Australia, India, Israel, Japan, New Zealand, Saudi Arabia, Singapore, South Korea, United Arab Emirates, UK, USA). As of October 2023, 31 countries have enacted AI legislation, while an additional 13 countries are currently discussing and deliberating on AI laws.

Key findings

Little uncertainty

These key findings are supported by a large body of evidence and systematic analyses. There is little uncertainty.

- AI research is characterised by a strong leadership of AI research activities and infrastructure development by industry. This has implications for the practice of research itself.

- AI research and research using AI require large amounts of infrastructure. The largest AI infrastructures are located outside Europe.

Some uncertainty

There is some evidence to support these key findings, but some uncertainty exists.

- Across the globe, the regulatory landscape around AI is highly dynamic. In Europe, the EU AI Act aims to become the most comprehensive AI legislation in the world.

- AI research and the use of AI in research are highly impacted by the strong economic and geopolitical interests in AI.

Chapter 3. Opportunities and benefits of AI in science

This chapter outlines currently available evidence on the uses of AI to support or enhance research work, through applications of AI across the scientific process. The evidence was curated to include the most relevant and successful uses of AI in science at this time and exclude controversial evidence that still needs to be better understood and investigated. Finally, this chapter highlights that the advances in AI science and technology will lead to further potential uses of AI in research, and that there are currently no comprehensive evaluation studies about the impact of AI on the science system as a whole.

AI is increasingly used throughout science

The use of AI in science is not new. However, scientists everywhere are incorporating generative AI and machine learning tools in their research due to increased accessibility. With tools to analyse large quantities of text, code, images, and field-specific data, AI technologies facilitate the generation of new ideas, knowledge, and solutions. For example, in the last three decades, the percentage of AI-related publications has increased from less than 0.5% to 4% of all publications in health and life sciences, social sciences and humanities; from less than 1% to 10% in the physical sciences. Meanwhile, 30% of computer science publications were AI-related in 2022 (Hajkowicz et al, 2022).

The number of scientific projects incorporating AI is growing, with successful examples in protein engineering, medical diagnostics, humanities and weather forecasting. AlphaFold, an AI tool developed by DeepMind, predicts structures of thousands of proteins with more than 90% accuracy, tremendously accelerating scientific productivity: it previously took years of study for a PhD student to explore the three-dimensional structure formation of a single protein (Jumper et al, 2021). Recently, a new family of antibiotics was found with the help of AI technologies, a significant advance in drug design (Wong et al, 2023).